a5cent

New member

- Nov 3, 2011


The slides I linked are from ARM, not from Qualcomm.

Yeah. What I should have said is that I'd prefer a source that isn't directly involved in selling the architecture, which excludes both Qualcomm and ARM. Sorry about that.

Geekbench3 is not compiled for NEON (nor SSE/AVX for that matter). <snipped>

Most of the improvements of the ARMv8 ISA directly aim at performance gains. Take the register set as an example. You just don't increase your register set from 16 to 32 if you don't expect significant gains from that. It's not just the gates of the registers and multiplexers you would waste; you are also losing 3 bits of instruction encoding space, which is very precious when you want to stay at fixed-length 32-bit instructions. It's not only large functions which gain from the increased register set, but also small leaf functions in the call graph, because they now have 8 scratch registers available.
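For anyone wondering where the "3 bits" figure comes from, here is a minimal sketch of the arithmetic. It assumes a typical three-operand instruction (one destination and two source registers); the function names are made up for illustration and are not from any ARM document.

```python
def register_field_bits(num_registers: int) -> int:
    """Bits needed to name one register out of num_registers."""
    return (num_registers - 1).bit_length()


def operand_bits(num_registers: int, operands: int = 3) -> int:
    """Encoding bits spent on register fields, assuming `operands`
    register operands per instruction (3 is an assumption here)."""
    return register_field_bits(num_registers) * operands


# 16 registers need 4 bits per field, 32 registers need 5 bits per field.
extra_bits = operand_bits(32) - operand_bits(16)
print(extra_bits)  # 3
```

So each register field grows by one bit, and with three register fields that adds up to the 3 bits mentioned in the quote.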

If it's not NEON, then those benchmarks are using some other ARMv8 hardware-accelerated feature to misrepresent what should be a measure of general and pure integer performance. Maybe their integer tests are running AES or SHA1 ciphers, which the CPU offloads to ARMv8's hardware-accelerated cipher units. Either way, it's extremely fishy.

I understand that the ARMv8 ISA includes many advancements. I'm not saying they are irrelevant. They do matter when performing very specific tasks. However, despite all the advancements you mentioned, there is still no way those will get us to general performance improvements in the range of >=20% just by recompiling to a new ISA. Not happening. I'm not buying it.

The nice thing about synthetic benchmarks is that they are not reliant on the OS or other libraries. Side effects from updated OS/libraries are therefore removed.

Agreed. On the other hand, I don't know anybody who uses their smartphone primarily to run Dhrystone, AES, or other such algorithms which fit entirely into a CPU's L1 cache. That's the only sort of thing Geekbench really tests. Such tests have their place if you're talking about CPU theory and want to understand very specific strengths and weaknesses of a given CPU architecture. They are almost meaningless if we're trying to gauge how a CPU will perform in the average, everyday computing tasks which Joe and Jane care about. Unfortunately, if we want to measure the impact of the ARMv8 ISA on the types of things that actually matter to Joe and Jane, we have no choice but to measure OS glitches and inconsistencies along with everything else. IMHO that's still far more interesting than what Geekbench provides, even if it isn't as pure. To convey the stats that actually matter to most users, I prefer something like PCMark.

In addition there is a Cortex A57 version of the Note 4 (version N910C with Exynos 5433). Note 4 was never updated to 64 bit Android. So there you have a comparison right at hand.

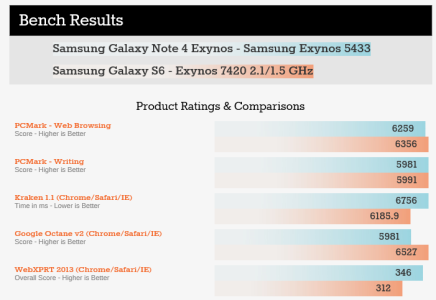

Okay, thanks for pointing that out. I've taken a closer look at the two devices you mentioned. These are my results:

| Device | GPU | CPU | Clock | Clock % |
| --- | --- | --- | --- | --- |
| Samsung Galaxy Note 4 | Mali-T760 MP6 | Exynos 5433 / 4x A57 | 1.9 GHz | 100% |
| Samsung Galaxy S6 | Mali-T760 MP8 | Exynos 7420 / 4x A57 | 2.1 GHz | 110.5% |

As the GPU in the S6 is quite a bit more powerful than the GPU in the Note 4, we must disregard any graphics/video related benchmarks (video playback, photo editing, 3D rendering etc). We'd also have to compensate for the Galaxy S6's 10.5% higher clock rate. These are the benchmarks from Anandtech's bench, which most closely correlate with what we're trying to measure:

source

These benchmarks are a far more accurate representation of what to expect in real world usage. They mimic the CPU workloads created by browsing the web, or editing a document on your phone. The results are either very similar for both CPUs, or they quite accurately reflect the 10% bump in clock rate the S6 has over the Note 4. Almost all the other results listed in Anandtech's bench are GPU related, so we can't rely on them to tell us anything about ARMv8. If both CPUs had the same clock rate, the measured results would be pretty much identical across the board.
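To make the clock compensation concrete, here is a rough sketch of how a result could be scaled back to the Note 4's clock. It assumes performance scales linearly with clock rate, which is a simplification, and the example score is a hypothetical placeholder, not a number from Anandtech's bench.

```python
NOTE4_CLOCK_GHZ = 1.9  # Exynos 5433 A57 clock, from the table above
S6_CLOCK_GHZ = 2.1     # Exynos 7420 A57 clock, from the table above


def clock_ratio(clock_ghz: float, ref_clock_ghz: float) -> float:
    """How much faster the device is clocked than the reference."""
    return clock_ghz / ref_clock_ghz


def normalize_to_reference(score: float, clock_ghz: float,
                           ref_clock_ghz: float,
                           higher_is_better: bool = True) -> float:
    """Scale a result to what it would be at the reference clock,
    assuming linear scaling with clock rate (an assumption)."""
    ratio = clock_ratio(clock_ghz, ref_clock_ghz)
    # Scores shrink at a lower clock; run times grow.
    return score / ratio if higher_is_better else score * ratio


ratio = clock_ratio(S6_CLOCK_GHZ, NOTE4_CLOCK_GHZ)
print(f"S6 clock relative to Note 4: {ratio * 100:.1f}%")

# Hypothetical S6 score of 110.5, purely for illustration.
print(normalize_to_reference(110.5, S6_CLOCK_GHZ, NOTE4_CLOCK_GHZ))
```

If a clock-normalized S6 result lands close to the Note 4 result, the difference is explained by clock rate alone rather than by the ISA.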

This is pretty much exactly what I expected to find. In everyday use, there is little to no discernible difference between 32-bit ARMv7 and 64-bit ARMv8 code when run on the same CPU. If the general >=20% improvement were in any way accurate, we'd have seen that reflected in these benchmarks.
